When we talk about Self-Driving Cars (SDC) or Autonomous Vehicles (AV), what exactly do we mean?

At a high level, this question seems kind of self-explanatory: a self-driving car is one that drives itself, autonomously. But what does autonomy imply? Which systems or sub-systems of the vehicle are autonomous? Can it, for example, see what is around it: pedestrians, other vehicles, obstacles, road signs? Can it maneuver itself around bends, turns, and traffic lights? Is that autonomy? Can it brake safely? Does that count? What does “safe” mean?

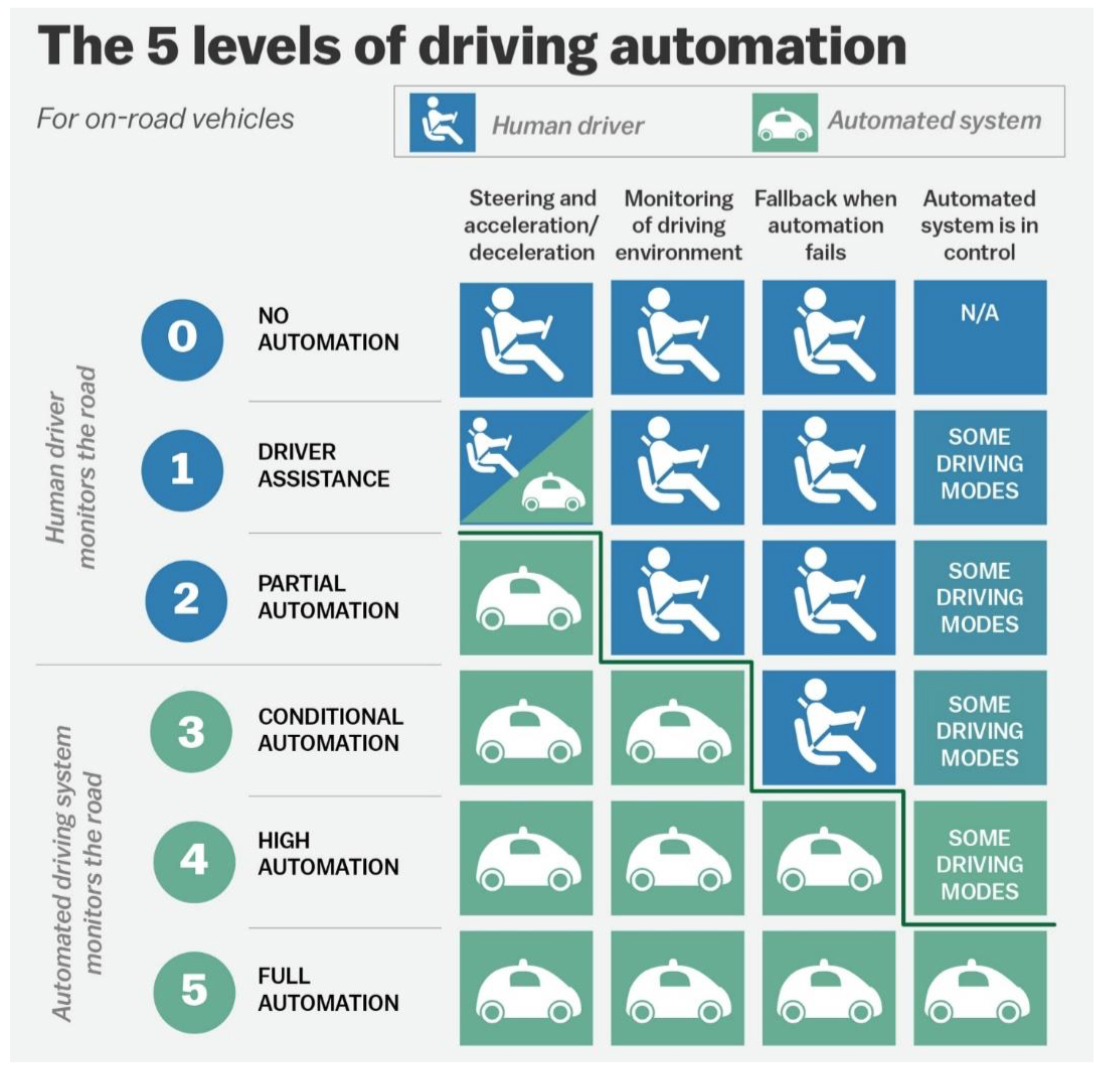

To answer exactly these questions, there’s a framework from [SAE International](https://saemobilus.sae.org/content/j3016_201401) (SAE J3016) that defines distinct levels of autonomy and what each one implies.

Levels of Autonomy

**Level 0: No Automation.** Nothing is automated. The human driver controls everything: steering, braking, throttle (gas pedal), etc.

**Level 1: Driver Assistance.** Most functions are driver controlled, but one specific function, either steering or acceleration/deceleration, is automated. Example: adaptive cruise control, which manages speed while the driver steers.

**Level 2: Partial Automation.** At least one driver assistance system handles “both steering and acceleration/deceleration using information about the driving environment.” Example: adaptive cruise control combined with lane centering. The driver is still in control and must be ready to take over the vehicle at any time.

**Level 3: Conditional Automation.** A driver is required in the vehicle, but under certain conditions can completely shift safety-critical functions to the vehicle and does not need to monitor the environment continuously. The driver must, however, be ready to take over when the vehicle requests it.

**Level 4: High Automation.** This is what is colloquially referred to as “self-driving” mode. The vehicle can drive autonomously, perform safety-critical functions, and monitor driving conditions. However, it cannot do so under all circumstances, e.g. in snowy or stormy conditions. In these situations, a human driver is required.

**Level 5: Full Automation.** All functions are performed by the vehicle under all driving conditions, including extreme ones. This is considered the holy grail of autonomy.
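One way to see how the levels differ is to ask three questions of each: who steers and accelerates, who monitors the driving environment, and who is the fallback when something goes wrong. The Python sketch below encodes the levels as data along those lines, following the summary table in the 2014 edition of SAE J3016. The class and function names (`AutonomyLevel`, `Agent`, `human_must_monitor`, `human_is_fallback`) are hypothetical, made up for illustration, and are not part of any SAE artifact or library.

```python
from dataclasses import dataclass
from enum import Enum


class Agent(Enum):
    """Who performs a given part of the driving task (illustrative names)."""
    HUMAN = "human driver"
    SYSTEM = "system"
    SHARED = "human driver and system"


@dataclass(frozen=True)
class AutonomyLevel:
    level: int
    name: str
    execution: Agent        # steering and acceleration/deceleration
    monitoring: Agent       # monitoring of the driving environment
    fallback: Agent         # who handles the driving task when things go wrong
    system_capability: str  # driving modes in which the automation works


# A sketch of the SAE J3016 (2014) summary table; not an official encoding.
SAE_LEVELS = [
    AutonomyLevel(0, "No Automation", Agent.HUMAN, Agent.HUMAN, Agent.HUMAN, "n/a"),
    AutonomyLevel(1, "Driver Assistance", Agent.SHARED, Agent.HUMAN, Agent.HUMAN, "some driving modes"),
    AutonomyLevel(2, "Partial Automation", Agent.SYSTEM, Agent.HUMAN, Agent.HUMAN, "some driving modes"),
    AutonomyLevel(3, "Conditional Automation", Agent.SYSTEM, Agent.SYSTEM, Agent.HUMAN, "some driving modes"),
    AutonomyLevel(4, "High Automation", Agent.SYSTEM, Agent.SYSTEM, Agent.SYSTEM, "some driving modes"),
    AutonomyLevel(5, "Full Automation", Agent.SYSTEM, Agent.SYSTEM, Agent.SYSTEM, "all driving modes"),
]


def human_must_monitor(level: int) -> bool:
    """True if the human driver must continuously monitor the environment."""
    return SAE_LEVELS[level].monitoring is Agent.HUMAN


def human_is_fallback(level: int) -> bool:
    """True if the human driver must be ready to take over as the fallback."""
    return SAE_LEVELS[level].fallback is Agent.HUMAN


if __name__ == "__main__":
    # Print who monitors and who is the fallback at each level.
    for lvl in SAE_LEVELS:
        print(f"Level {lvl.level} ({lvl.name}): "
              f"monitoring={lvl.monitoring.value}, fallback={lvl.fallback.value}")
```

Laid out this way, the two big jumps stand out: at Level 3 the system takes over monitoring, and at Level 4 it also becomes the fallback, so the human is no longer needed within the conditions the system supports.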

So, where are we today? Various companies are making a lot of progress, but my estimate is that we are somewhere near Level 3 and will get closer to Level 4 in the next 5 years or so.

The above framework is good for policy discussions, such as regulating SDC/AVs on the road, performing tests (real-world or otherwise), comparing vehicle capabilities, and perhaps for marketing purposes. However, this framework is not particularly useful from an engineering design perspective.

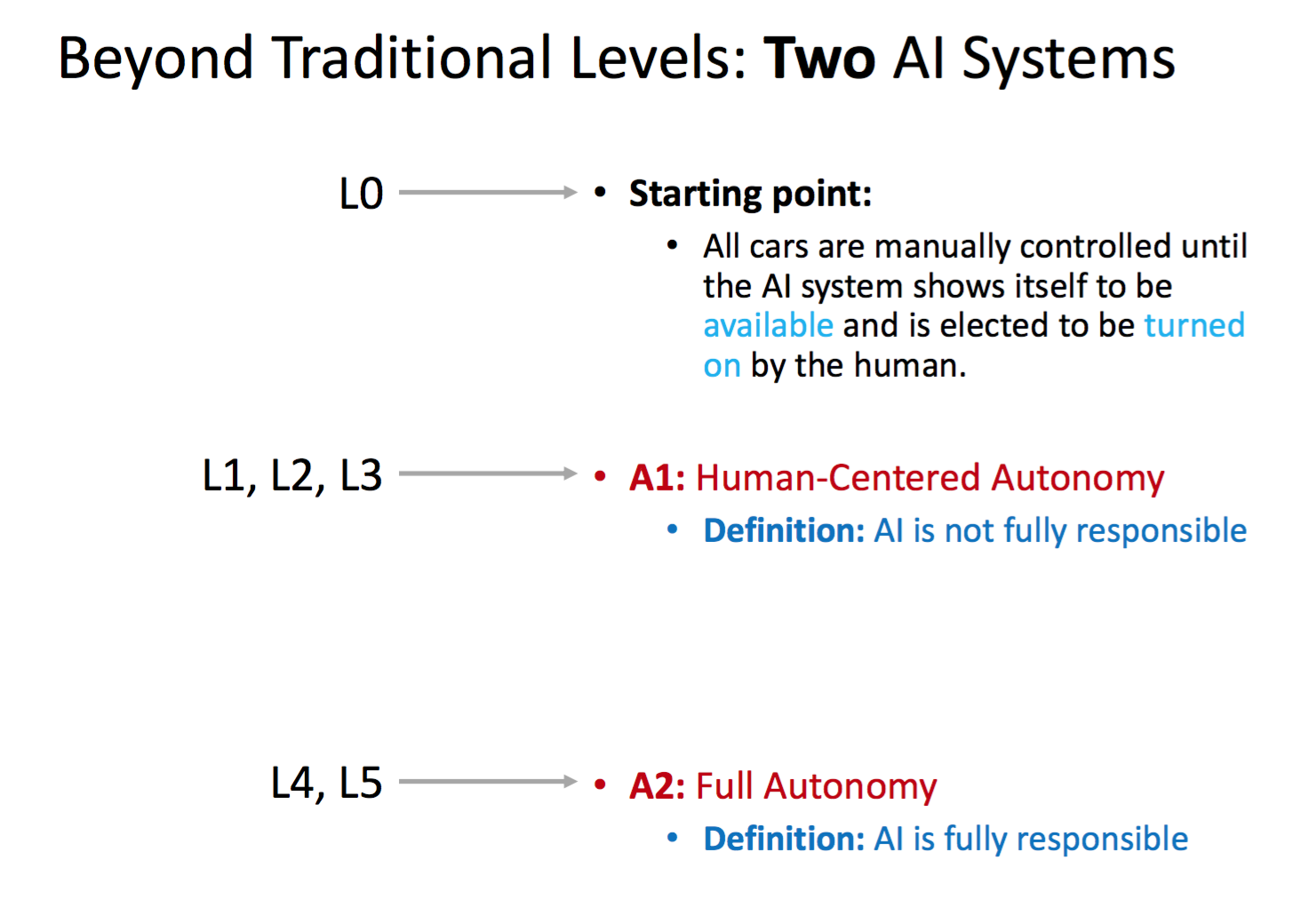

Lex Fridman at MIT introduces a different perspective. He proposes the following framework for autonomy:

**Human-centered Autonomy.** The AI (the automated functions) is not fully responsible. This mode is characterized by features such as “availability” (when and where automation is available, e.g. highway driving or in traffic), sensor-based control, and how many seconds are available for human intervention (1 second, 10 seconds, etc.).

**Full Autonomy.** The AI is fully responsible and no human intervention is necessary: there is no tele-operation (human remote control) and no 10-second takeover rule (the AI can ask the human to take over, but is not guaranteed to receive it). Humans can still choose to take over.

(credit: Lex Fridman, MIT)