It has now been nearly two months since the first self-driving car fatality (see my previous post). I deliberately held off writing more on this until new facts emerged.

It now looks like we finally know the cause of the accident.

Investigation and new facts

Following the accident, Uber quite rightly withdrew all its autonomous vehicles from road tests. This is not just a safety precaution — after all, these vehicles are under test — but is also likely a legal requirement as part of the investigation.

An investigation followed, conducted jointly with the National Transportation Safety Board (NTSB). To Uber’s credit, the investigation has moved quickly and the data has been shared. We are still awaiting the full results.

The video released by the investigators [1] (caution: the video shows graphic content of the collision, so use your judgment) clearly shows the pedestrian, Elaine Herzberg, walking her bicycle across the road at night, away from any intersection. The Volvo XC90, equipped with a roof-mounted lidar, struck her without stopping. An internal dash cam shows the backup safety driver (who was perhaps momentarily not paying attention) looking up, shocked, just before the collision. It is a genuinely disturbing video.

After watching the video, my analysis was that one of the following had likely happened:

- the sensors malfunctioned due to low light (although the lidar is meant to detect objects even in very low visibility conditions),

- the prediction software didn’t appropriately signal an intervention, or

- the intervention time was too short (under 2 seconds) to avoid the collision.
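To see why a warning of under 2 seconds can be too short, here is a minimal sketch of the standard stopping-distance arithmetic: the car keeps moving at full speed during the reaction time, then needs v²/(2a) metres to brake to a halt. The speed, deceleration and reaction times below are hypothetical round numbers chosen for illustration, not figures from the investigation, and the model assumes constant speed until braking begins and constant deceleration afterwards.

```python
def stopping_distance(speed_mps: float, reaction_s: float, decel_mps2: float) -> float:
    """Distance covered while reacting plus distance needed to brake to a halt."""
    reaction_distance = speed_mps * reaction_s
    braking_distance = speed_mps ** 2 / (2 * decel_mps2)
    return reaction_distance + braking_distance


def can_stop_in_time(speed_mps: float, warning_s: float,
                     reaction_s: float, decel_mps2: float) -> bool:
    """True if the car halts before reaching a hazard first flagged warning_s
    seconds before impact (assumes constant speed until braking starts)."""
    distance_to_hazard = speed_mps * warning_s
    return stopping_distance(speed_mps, reaction_s, decel_mps2) <= distance_to_hazard


# Hypothetical values: 17 m/s (about 38 mph), 7 m/s^2 hard braking, 2 s warning.
SPEED = 17.0
DECEL = 7.0
WARNING = 2.0

# Assumed 1.5 s reaction for a human taking over; 0.2 s for automatic braking.
print(can_stop_in_time(SPEED, WARNING, reaction_s=1.5, decel_mps2=DECEL))
print(can_stop_in_time(SPEED, WARNING, reaction_s=0.2, decel_mps2=DECEL))
```

With these assumed numbers, a human needing 1.5 seconds to react would need about 46 m to stop but has only 34 m available, while an automatic brake reacting in 0.2 seconds would stop in about 24 m. The point is qualitative: with a short warning, most of the budget is consumed by reaction time.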

It turns out that the perception system did in fact detect the pedestrian, as reported by The Information, but the software dismissed the detection as a “false positive” and the vehicle carried on, resulting in the fatal collision.

So it was indeed a software glitch, rather than a sensor failure, that caused the collision.

What This Means

What does it mean for the perception system to reject a detection as a false positive? It means the system did not have enough confidence that the detected object was real. Each detection carries a confidence score, and detections scoring below a threshold are discarded. This confidence threshold is a tunable parameter that affects not just the perception module but the performance of the entire system.

A low threshold (high sensitivity) means that every object, no matter how small or irrelevant, will be detected and acted upon. We, as passengers, drivers or pedestrians on the road, want this kind of sensitive detection for our own safety. But it also means the autonomous vehicle will slow down or even stop for every object it detects, which makes for a jerky and uncomfortable ride.

On the other hand, a higher threshold means the opposite: low-confidence detections (such as trash on the road or a plastic bag) can be ignored, so the AV does not have to pause as often. The result is a smoother ride, at the risk of also ignoring a real obstacle.

Clearly, this tunable threshold has large implications, both for occupants of AVs and for pedestrians, cyclists and other road users. Ideally, we want both highly sensitive detection for safety and a comfortable ride.

This trade-off is at the crux of most machine learning systems, and of all self-driving vehicles. How we resolve it, along with every other trade-off, depends largely on our appetite for automation and comfort, our public policy frameworks, safety regulations for autonomous cars, and more.
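The threshold trade-off can be made concrete with a toy example. The sketch below assumes a hypothetical perception output in which each detection carries a confidence score, and the planner reacts only to detections at or above a threshold. The `Detection` class, the labels and all the scores are made up for illustration; they do not describe Uber's or anyone else's actual software.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # hypothetical class name from a perception model
    confidence: float  # model score in [0, 1]
    is_real: bool      # ground truth, known only in this toy example


def filter_detections(detections, threshold):
    """Keep the detections the planner will react to (confidence >= threshold)."""
    return [d for d in detections if d.confidence >= threshold]


def evaluate(detections, threshold):
    """Return (unnecessary stops, missed obstacles) at a given threshold:
    spurious detections that are kept, and real objects that are discarded."""
    kept = filter_detections(detections, threshold)
    false_positives = sum(1 for d in kept if not d.is_real)
    missed = sum(1 for d in detections if d.is_real and d.confidence < threshold)
    return false_positives, missed


# Made-up scores for a single frame, for illustration only.
frame = [
    Detection("plastic_bag", 0.35, is_real=False),
    Detection("shadow", 0.20, is_real=False),
    Detection("pedestrian_with_bicycle", 0.45, is_real=True),
    Detection("car", 0.95, is_real=True),
]

for threshold in (0.1, 0.4, 0.5):
    fp, missed = evaluate(frame, threshold)
    print(f"threshold={threshold}: unnecessary stops={fp}, missed obstacles={missed}")
```

With these made-up scores, a threshold of 0.1 reacts to the bag and the shadow (two unnecessary stops), 0.4 happens to keep exactly the real objects, and 0.5 gives a smooth ride but discards the pedestrian. Real scores rarely separate genuine objects from clutter this cleanly, which is why choosing the threshold is a genuine trade-off.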

What’s Next for the Industry

In the time since the first self-driving car fatality, two other accidents have occurred. The first was a Tesla Model X running on Autopilot that crashed in Mountain View, killing its driver; that investigation is still ongoing.

The second, more recent accident involved a Waymo Pacifica minivan which, prima facie, was not at fault. According to initial police reports, Waymo’s vehicle was “at the wrong place, at the wrong time”.

Sadly, these will not be the last accidents involving autonomous vehicles.

What should be done about them?

As far as regulation goes, self-driving vehicles are new territory. The question of legal liability (who is at fault) will be debated in detail, and the NTSB continues its investigations. Meanwhile, there is some hope from the tech industry itself.

Voyage, the self-driving taxi company, has launched its Open Autonomous Safety (OAS) initiative (see “Open-Sourcing Our Approach to Autonomous Safety” on Voyage’s blog). It is the first company in the industry to open-source its safety tests for self-driving cars. The initiative deserves praise: sharing such tests is how the industry can make faster progress on both technology and safety.

The suite covers scenario testing, functional safety and autonomy assessment, and includes a testing toolkit.

I hope this is just a start, and that other industry players will contribute to it or release their own test suites.

More on this as it progresses. I will be watching this space with interest.

References:

- CNN, “Uber self-driving car kills pedestrian in first fatal autonomous crash”, Uber pulls self-driving cars after first fatal crash of autonomous vehicle

- Reuters, Uber sets safety review; media report says software cited in fatal crash | Reuters

- TechCrunch, Uber vehicle reportedly saw but ignored woman it struck – TechCrunch

- ArsTechnica, Report: Software bug led to death in Uber’s self-driving crash | Ars Technica

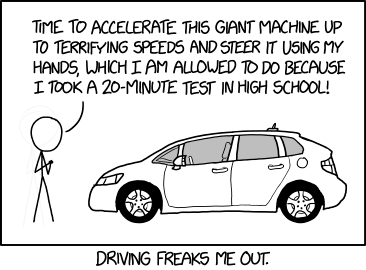

- XKCD, xkcd: Driving Cars

- Voyage Autonomous Safety, Voyage - Open Autonomous Safety - Collaborating for a safe autonomous future